CEPH Rebuilding OSD

Note

This is not official documentation for AutomationSuite

A Ceph OSD can become corrupted because of an underlying hardware issue, or because a system event triggers an edge case in the Ceph code path. A corrupted OSD can leave the cluster in a warning state, because data is no longer fully replicated according to the configured replication settings.

In such a case, any of the other healthy OSDs can be used to sync the data into a re-created OSD. The procedure to rebuild a PV-backed OSD is as follows:

- Disable self-heal for the fabric-installer and rook applications

kubectl -n argocd patch application fabric-installer --type=json -p '[{"op":"replace","path":"/spec/syncPolicy/automated/selfHeal","value":false}]'

kubectl -n argocd patch application rook-ceph-operator --type=json -p '[{"op":"replace","path":"/spec/syncPolicy/automated/selfHeal","value":false}]'

kubectl -n argocd patch application rook-ceph-object-store --type=json -p '[{"op":"replace","path":"/spec/syncPolicy/automated/selfHeal","value":false}]'
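The three patch commands above differ only in the application name, so they can be wrapped in a loop. This is a minimal sketch, not part of the original procedure: the function names `selfheal_patch` and `set_selfheal` are hypothetical, and only the `false` value is taken from the steps above.

```shell
# Build the JSON patch that sets selfHeal to the given value.
# Only "false" is used by the procedure above; the parameter is an assumption.
selfheal_patch() {
  printf '[{"op":"replace","path":"/spec/syncPolicy/automated/selfHeal","value":%s}]' "$1"
}

# Apply the patch to each ArgoCD application named in the steps above.
set_selfheal() {
  local value="$1" app
  for app in fabric-installer rook-ceph-operator rook-ceph-object-store; do
    kubectl -n argocd patch application "$app" --type=json -p "$(selfheal_patch "$value")"
  done
}
```

Calling `set_selfheal false` runs the same three commands as listed above.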

- Scale down operator

kubectl -n rook-ceph scale --replicas=0 deploy/rook-ceph-operator

- Get PVC name corresponding to crashing/corrupted OSD

kubectl -n rook-ceph get deploy <CORRUPTED_OSD_DEPLOYMENT> --show-labels

e.g

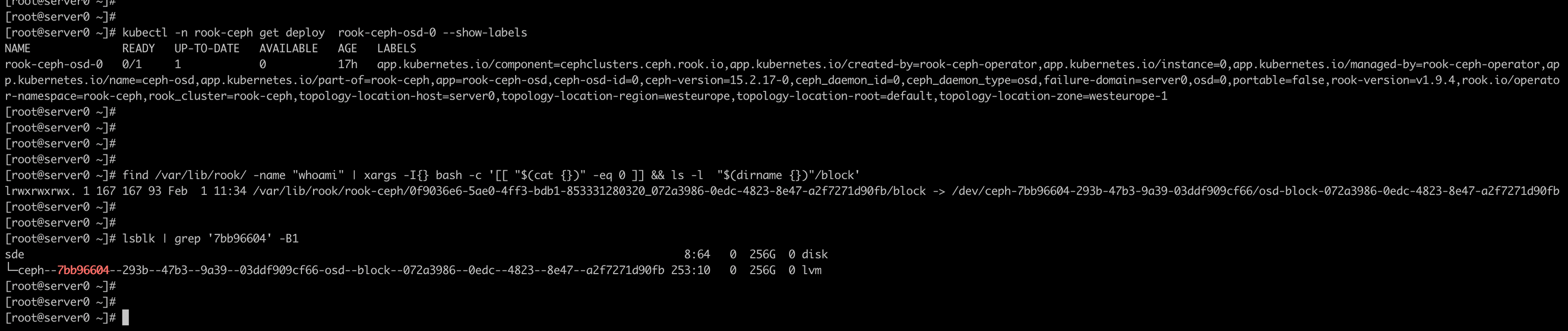

kubectl -n rook-ceph get deploy rook-ceph-osd-0 --show-labels

# Look for the label that starts with set1; this is the name of the PVC we need to delete
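Picking the PVC name out of the label list by eye is error-prone, so a small filter may help. The sketch below assumes the labels are passed as the comma-separated string printed in the LABELS column. The function name `pvc_from_labels` is hypothetical, and skipping values that equal the bare prefix is an assumption made so that a label whose value is just `set1` is not mistaken for the PVC.

```shell
# Print label values that start with the given prefix (default: set1).
# $1: comma-separated labels, as printed by `kubectl get deploy --show-labels`.
# Values equal to the bare prefix are skipped (assumption: those are not PVC names).
pvc_from_labels() {
  local labels="$1" prefix="${2:-set1}"
  printf '%s\n' "$labels" | tr ',' '\n' |
    awk -F= -v p="$prefix" 'index($2, p) == 1 && length($2) > length(p) { print $2 }'
}
```

For a made-up label string such as `app=rook-ceph-osd,ceph-osd-id=0,ceph.rook.io/pvc=set1-data-0-abcde`, the function prints `set1-data-0-abcde`. Check the result against the actual output before deleting anything.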

- Scale down crashing/corrupted OSD

kubectl -n rook-ceph scale --replicas=0 deploy <CORRUPTED_OSD_DEPLOYMENT>

e.g

kubectl -n rook-ceph scale --replicas=0 deploy rook-ceph-osd-0

- Delete crashing/corrupted OSD PVC

kubectl -n rook-ceph delete pvc <PVC_NAME>

- Delete crashing/corrupted OSD deployment

kubectl -n rook-ceph delete deploy <CORRUPTED_OSD_DEPLOYMENT>

e.g

kubectl -n rook-ceph delete deploy rook-ceph-osd-0

- Start rook operator

kubectl -n rook-ceph scale --replicas=1 deploy/rook-ceph-operator

- Wait until the new replacement OSD is created

- Remove the old OSD from the Ceph cluster

- Mark OSD out

kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph osd out osd.<ID>

- Purge OSD

kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph osd purge osd.<ID> --force --yes-i-really-mean-it
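Because the purge is forced, a mistyped ID removes the wrong OSD. The following sketch wraps the two commands above behind a basic check that the ID is a plain non-negative integer. The function name `remove_osd` is hypothetical, and the validation is an added safeguard rather than part of the original procedure.

```shell
# Mark an OSD out and then purge it, using the same commands as above.
# $1: numeric OSD ID (for example 0).
remove_osd() {
  local id="$1"
  # Refuse empty input or anything containing a non-digit character.
  case "$id" in
    ''|*[!0-9]*) echo "invalid OSD id: $id" >&2; return 1 ;;
  esac
  # Purge only runs if marking the OSD out succeeded.
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph osd out "osd.$id" &&
    kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph osd purge "osd.$id" --force --yes-i-really-mean-it
}
```

For the example deployment used on this page, the call would be `remove_osd 0`.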

Tip

The procedure is almost the same for a raw-device-backed OSD, except that instead of deleting the PVC we need to clean up the raw device. To find the raw device used by the corrupted OSD:

- Get OSD ID

kubectl -n rook-ceph get deploy <CORRUPTED_OSD_DEPLOYMENT> --show-labels

e.g

kubectl -n rook-ceph get deploy rook-ceph-osd-0 --show-labels

# Look for `ceph-osd-id` (In this case it is 0)

- Find OSD uuid

find /var/lib/rook/ -name "whoami" | xargs -I{} bash -c '[[ "$(cat {})" -eq <OSD_ID> ]] && ls -l "$(dirname {})"/block'

e.g

find /var/lib/rook/ -name "whoami" | xargs -I{} bash -c '[[ "$(cat {})" -eq 0 ]] && ls -l "$(dirname {})"/block'

- Find block device name

lsblk | grep '<FIRST_UUID_SECTION_FROM_LINKED_DEVICE>' -B1

e.g

lsblk | grep '7bb96604' -B1

So the device name is sde
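The `<FIRST_UUID_SECTION_FROM_LINKED_DEVICE>` value can be extracted from the link target instead of being copied by hand. This sketch assumes the target path contains a lowercase UUID whose first section is eight hex digits, as in the `7bb96604` example. The function name `first_uuid_section` is hypothetical.

```shell
# Print the first run of eight lowercase hex digits found in the given string.
# $1: the device path that the OSD's "block" symlink points to.
first_uuid_section() {
  printf '%s\n' "$1" | grep -oE '[0-9a-f]{8}' | head -n 1
}
```

The link target itself can be read with `readlink` on the `block` path found in the previous step, and the result passed to `lsblk | grep ... -B1` as shown above.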

- Clean up block device

sgdisk --zap-all <DEVICE_PATH>

dd if=/dev/zero of=<DEVICE_PATH> bs=1M count=100 oflag=direct,dsync

blkdiscard <DEVICE_PATH>

ls /dev/mapper/ceph-* | grep '<FIRST_UUID_SECTION_FROM_LINKED_DEVICE>' | xargs -I% -- dmsetup remove %

rm -rf /dev/ceph-<FIRST_UUID_SECTION_FROM_LINKED_DEVICE>-*

e.g

sgdisk --zap-all /dev/sde

dd if=/dev/zero of=/dev/sde bs=1M count=100 oflag=direct,dsync

blkdiscard /dev/sde

ls /dev/mapper/ceph-* | grep '7bb96604' | xargs -I% -- dmsetup remove %

rm -rf /dev/ceph-7bb96604-*
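These cleanup commands are destructive, so it can be worth guarding them against a wrong device path or an empty UUID section. The sketch below runs the same five commands only after two checks pass. The function name `cleanup_osd_device` and both checks are assumptions added for safety, not part of the original procedure.

```shell
# Wipe a raw device previously used by an OSD, using the commands above.
# $1: device path (for example /dev/sde)
# $2: first UUID section of the linked device (for example 7bb96604)
cleanup_osd_device() {
  local dev="$1" uuid="$2"
  # Require a non-empty lowercase hex string so grep and rm cannot match everything.
  case "$uuid" in
    ''|*[!0-9a-f]*) echo "invalid UUID section: $uuid" >&2; return 1 ;;
  esac
  # Stop unless the path is an existing block device.
  [ -b "$dev" ] || { echo "not a block device: $dev" >&2; return 1; }
  sgdisk --zap-all "$dev"
  dd if=/dev/zero of="$dev" bs=1M count=100 oflag=direct,dsync
  blkdiscard "$dev"
  ls /dev/mapper/ceph-* | grep "$uuid" | xargs -I% -- dmsetup remove %
  rm -rf /dev/ceph-"$uuid"-*
}
```

With the values from the example above, the call would be `cleanup_osd_device /dev/sde 7bb96604`, run as root on the node that owns the device.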