Re-Create Ceph Cluster

Note

This is not official documentation for Automation Suite.

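In certain situations you may need to re-create the Ceph cluster. This section walks through the steps: disable Argo CD self-heal, delete the existing Rook/Ceph resources, re-sync the Ceph applications, and re-run the jobs that create the admin and service credentials.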

- Disable self-heal on the Argo CD applications, so that Argo CD does not restore the Ceph resources while you delete them

```shell
kubectl -n argocd patch application fabric-installer --type=json -p '[{"op":"replace","path":"/spec/syncPolicy/automated/selfHeal","value":false}]'

kubectl -n argocd patch application rook-ceph-operator --type=json -p '[{"op":"replace","path":"/spec/syncPolicy/automated/selfHeal","value":false}]'

kubectl -n argocd patch application rook-ceph-object-store --type=json -p '[{"op":"replace","path":"/spec/syncPolicy/automated/selfHeal","value":false}]'
```
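The three patch commands above differ only in the application name, so they can be looped. The bash sketch below keeps the same JSON patch and prints the resulting `selfHeal` value for each application; `disable_selfheal` is a hypothetical helper name. A JSON-patch `replace` fails when `/spec/syncPolicy/automated/selfHeal` does not exist yet (in which case Argo CD already treats self-heal as off), so the sketch reports such a failure as a warning instead of stopping.

```shell
# Disable Argo CD self-heal on every application name passed as an argument.
# Uses the same JSON patch as the individual commands above.
disable_selfheal() {
  local app patch
  patch='[{"op":"replace","path":"/spec/syncPolicy/automated/selfHeal","value":false}]'
  for app in "$@"; do
    if ! kubectl -n argocd patch application "${app}" --type=json -p "${patch}"; then
      # "replace" fails when the field is absent; warn instead of aborting.
      echo "warning: could not patch ${app}; check .spec.syncPolicy manually" >&2
    fi
    # Print the current value so the change can be confirmed (expect "false").
    echo "${app} selfHeal=$(kubectl -n argocd get application "${app}" \
      -o jsonpath='{.spec.syncPolicy.automated.selfHeal}')"
  done
}
```

Usage: `disable_selfheal fabric-installer rook-ceph-operator rook-ceph-object-store`.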

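- Delete the Ceph resources in the rook-ceph namespace and remove /var/lib/rook through the longhorn-loop-device-cleaner pods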

```shell
# Delete all deployments in rook-ceph (the Rook operator and the Ceph daemons)
kubectl -n rook-ceph delete deploy --all

# For each resource type: strip finalizers so deletion cannot hang, then force-delete
k8s_resources_to_clean=(job pod serviceaccount service secret configmap persistentvolumeclaim)

for resource in "${k8s_resources_to_clean[@]}"; do
  kubectl -n rook-ceph get "${resource}" --no-headers -o name | grep "${resource}" | xargs -r kubectl -n rook-ceph patch --type=json -p '[{"op":"remove" , "path": "/metadata/finalizers"}]' || true
  kubectl -n rook-ceph get "${resource}" --no-headers -o name | grep "${resource}" | xargs -r kubectl -n rook-ceph delete --force --ignore-not-found
done

# Remove the Rook data directory through each longhorn-loop-device-cleaner pod
for dsPod in $(kubectl -n kube-system get pod -l app=longhorn-loop-device-cleaner -o name); do
  kubectl -n kube-system exec "${dsPod}" -- rm -rf /var/lib/rook || true
done
```
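Before re-syncing, it can help to confirm that the namespace is actually empty. The bash sketch below (`check_namespace_empty` is a hypothetical helper) lists anything still present for the given kinds and returns non-zero if something is left. It ignores `serviceaccount/default` and `configmap/kube-root-ca.crt`, which Kubernetes recreates automatically in every namespace.

```shell
# Report objects of the given kinds that still exist in a namespace.
# Returns 0 when every kind is empty (apart from auto-created defaults), 1 otherwise.
check_namespace_empty() {
  local ns=$1; shift
  local kind remaining status=0
  for kind in "$@"; do
    # Filter out objects that Kubernetes itself recreates in each namespace.
    remaining=$(kubectl -n "${ns}" get "${kind}" -o name 2>/dev/null \
      | grep -v -x -e 'serviceaccount/default' -e 'configmap/kube-root-ca.crt')
    if [ -n "${remaining}" ]; then
      echo "still present in ${ns}:"
      echo "${remaining}"
      status=1
    fi
  done
  return "${status}"
}
```

Usage: `check_namespace_empty rook-ceph deploy job pod serviceaccount service secret configmap persistentvolumeclaim`.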

- Re-create the Ceph cluster by syncing the rook-ceph-operator and rook-ceph-object-store applications

```shell
argocd app sync rook-ceph-operator

argocd app sync rook-ceph-object-store
```

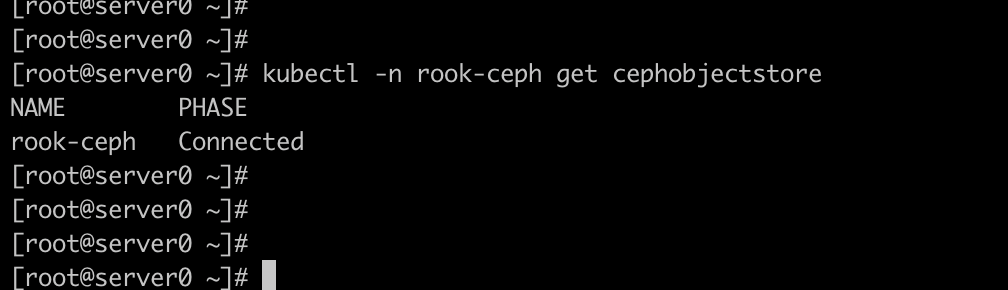
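To make sure the operator is healthy before the object store is synced, each sync can be followed by `argocd app wait --health`. A minimal bash sketch, assuming the object store depends on a running operator; `sync_and_wait` and the `ARGOCD_WAIT_TIMEOUT` variable (seconds, default 600) are hypothetical names.

```shell
# Sync each Argo CD application in order and wait until it reports Healthy
# before moving on to the next one.
sync_and_wait() {
  local app
  for app in "$@"; do
    argocd app sync "${app}" || return 1
    argocd app wait "${app}" --health --timeout "${ARGOCD_WAIT_TIMEOUT:-600}" || return 1
  done
}
```

Usage: `sync_and_wait rook-ceph-operator rook-ceph-object-store`.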

- Wait for the Ceph object store to be re-created (the PHASE column should eventually show Ready)

```shell
kubectl -n rook-ceph get cephobjectstore
```

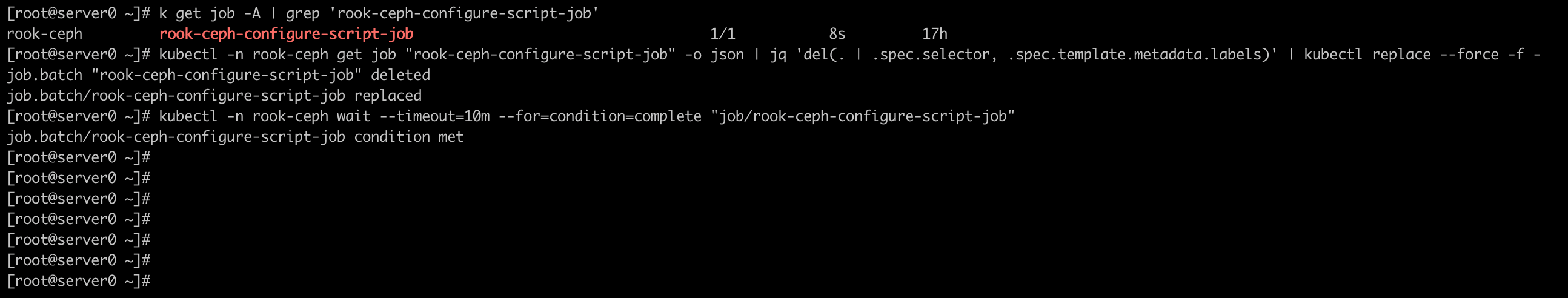
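Instead of re-running the `get` command by hand, the wait can be scripted as a polling loop. A bash sketch, assuming `.status.phase` is the field behind the PHASE column and that `Ready` is the target value; `wait_for_objectstores` and its defaults (900 s timeout, 10 s interval) are hypothetical.

```shell
# Poll until every CephObjectStore in the namespace reports phase "Ready",
# or give up after a timeout (in seconds).
wait_for_objectstores() {
  local ns=${1:-rook-ceph} timeout=${2:-900} interval=${3:-10}
  local waited=0 phases
  while [ "${waited}" -lt "${timeout}" ]; do
    # One phase per line, one line per object store.
    phases=$(kubectl -n "${ns}" get cephobjectstore \
      -o jsonpath='{range .items[*]}{.status.phase}{"\n"}{end}')
    # Done only if at least one store exists and no line differs from "Ready".
    if [ -n "${phases}" ] && ! grep -qvx 'Ready' <<<"${phases}"; then
      echo "all object stores Ready"
      return 0
    fi
    sleep "${interval}"
    waited=$((waited + interval))
  done
  echo "timed out; last phases: ${phases:-<none>}" >&2
  return 1
}
```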

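- Re-run rook-ceph-configure-script-job to create the admin user. The first command confirms the job exists, the second recreates it from its own spec minus the selector and pod template labels, and the third waits for it to complete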

```shell
kubectl get job -A | grep 'rook-ceph-configure-script-job'

kubectl -n rook-ceph get job "rook-ceph-configure-script-job" -o json | jq 'del(. | .spec.selector, .spec.template.metadata.labels)' | kubectl replace --force -f -

kubectl -n rook-ceph wait --timeout=10m --for=condition=complete "job/rook-ceph-configure-script-job"
```
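The selector and pod template labels are removed because Kubernetes generates them when a Job is created, and the API server rejects a new Job that carries a hand-supplied selector unless `spec.manualSelector` is set. `kubectl replace --force` then deletes the old Job and creates a fresh one. The same pattern can be wrapped in a reusable bash function; `rerun_job` is a hypothetical name and `jq` is assumed to be installed.

```shell
# Re-run an existing Kubernetes Job by recreating it from its own spec,
# then wait for it to complete.
#   $1 namespace, $2 job name, $3 optional timeout (default 10m)
rerun_job() {
  local ns=$1 job=$2 timeout=${3:-10m}
  # Drop the server-generated selector and pod template labels so the
  # recreated Job gets fresh ones; replace --force deletes and recreates it.
  kubectl -n "${ns}" get job "${job}" -o json \
    | jq 'del(.spec.selector, .spec.template.metadata.labels)' \
    | kubectl replace --force -f - || return 1
  kubectl -n "${ns}" wait --timeout="${timeout}" --for=condition=complete "job/${job}"
}
```

Usage: `rerun_job rook-ceph rook-ceph-configure-script-job`, and likewise `rerun_job uipath-infra credential-manager-job`.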

- Verify that the admin credentials secret has been created with the config-discovery=yes label

```shell
kubectl -n "rook-ceph" get secret ceph-object-store-secret --show-labels | grep 'config-discovery=yes'
```
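The check above can be made stricter by matching the label exactly and then listing which data keys the secret holds, without printing their values. `kubectl get` does not accept a resource name together with a label selector, so this bash sketch filters by label and matches the name; `secret_has_discovery_label` is a hypothetical helper.

```shell
# Succeed only if the secret exists and carries config-discovery=yes,
# then print its data keys (not the values) to confirm credentials were written.
secret_has_discovery_label() {
  local ns=${1:-rook-ceph} name=${2:-ceph-object-store-secret}
  if ! kubectl -n "${ns}" get secret -l config-discovery=yes -o name \
      | grep -qx "secret/${name}"; then
    echo "secret/${name} missing or not labelled config-discovery=yes" >&2
    return 1
  fi
  kubectl -n "${ns}" get secret "${name}" \
    -o go-template='{{range $k, $v := .data}}{{$k}}{{"\n"}}{{end}}'
}
```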

- Re-run the credential manager job to create the service-specific buckets and users

```shell
kubectl -n uipath-infra get job "credential-manager-job" -o json | jq 'del(. | .spec.selector, .spec.template.metadata.labels)' | kubectl replace --force -f -

kubectl -n uipath-infra wait --timeout=10m --for=condition=complete "job/credential-manager-job"
```
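If `kubectl wait` times out, the Job's logs are usually the quickest way to see why. A bash sketch that wraps the wait and, on failure, prints the last 50 log lines from a pod of the Job; `wait_job_or_show_logs` is a hypothetical helper and the 50-line tail is an arbitrary choice.

```shell
# Wait for a Job to complete; if it does not finish in time, show its recent logs.
#   $1 namespace, $2 job name, $3 optional timeout (default 10m)
wait_job_or_show_logs() {
  local ns=$1 job=$2 timeout=${3:-10m}
  if kubectl -n "${ns}" wait --timeout="${timeout}" --for=condition=complete "job/${job}"; then
    return 0
  fi
  echo "job/${job} did not complete within ${timeout}; recent logs:" >&2
  kubectl -n "${ns}" logs "job/${job}" --tail=50 >&2
  return 1
}
```

Usage: `wait_job_or_show_logs uipath-infra credential-manager-job`.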